Object detection is more than just a technological feat; it's the cornerstone of modern computer vision. By merging localization and classification, it allows for the precise identification and positioning of elements within images or video streams. This capability has become indispensable across a multitude of sectors: public safety, industrial automation, medical diagnostics, advanced robotics, and even for the autonomous cars navigating our roads with increasing confidence. Imagine accelerated medical diagnoses, intelligent production lines, and enhanced security. All this, and much more, is made possible by object detection. In this article, we will delve deep into one of the most iconic algorithms in this field: YOLO.

YOLO, an acronym for "You Only Look Once," is an object detection algorithm that marked a breakthrough. Its distinctive feature? Analyzing images in a single pass, which drastically reduces processing time without sacrificing performance. This combination of efficiency and accuracy has propelled YOLO to the ranks of reference models in computer vision. With constant evolution driven by an active community and players like Ultralytics, YOLO continues to push boundaries, as evidenced by research into advanced versions like YOLOv12.

YOLO's evolution is closely tied to its successive leading figures. Initially championed by Joseph Redmon, creator of YOLOv1 to YOLOv3 under the Darknet framework, the project took a major turn when Redmon withdrew from computer vision research in early 2020 for ethical reasons. This withdrawal didn't spell the end for YOLO, but rather the beginning of a new era of diverse contributions. While researchers like Alexey Bochkovskiy and his team brilliantly ensured continuity with YOLOv4, extending the Darknet legacy, the ecosystem was ripe for an approach focused on greater accessibility. It was in this context that Ultralytics, under the leadership of Glenn Jocher, truly catalyzed the democratization of YOLO. By re-implementing and extending YOLO's fundamental concepts natively in PyTorch with models like YOLOv5, then YOLOv8, Ultralytics not only delivered cutting-edge performance but, more importantly, drastically lowered the barrier to entry. Their framework simplified the training, customization, and deployment of YOLO models, making them accessible to a global community of developers, researchers, and businesses.

In computer vision, interpreting and locating objects in images rely on sophisticated algorithms. Two main approaches have long coexisted, each with its strengths and weaknesses.

CNNs excel in their speed of analysis. They operate by applying filters to the image to progressively identify simple elements (edges, shapes, etc.) and then more complex combinations (eyes, wheels, etc.). This method, highly efficient and fast due to optimized calculations, forms the basis of many vision algorithms, including early versions of YOLO. However, CNNs can struggle to grasp complex relationships between distant objects or the overall context of an image as accurately as other techniques. For example, to recognize a "soccer ball," a CNN might find it difficult to link it to the presence of a soccer goal located on the other side of the image.

More recently, Transformers, with their attention mechanism, have generated great interest. Attention allows the algorithm to assess the relative importance of different parts of an image (or text) to each other. This gives it the ability to connect distant information and focus on what's essential to interpret complex scenes with high accuracy. Revisiting the "soccer ball" example, a Transformer can give more importance to the presence of a soccer goal located on the other side of the image. The major drawback of Transformers is their high demand for computing power, often making them slower than CNNs, which can be problematic for real-time applications. Among Transformer models, DETR is currently one of the best computer vision models. In comparison, the medium version of the YOLOv12 series is 3 times smaller than DETR.

YOLO's creators sought to combine the advantages of these two approaches. While retaining the efficient CNN architecture for speed, they integrated lighter, optimized attention mechanisms. The goal is not to systematically use the "heavy" attention of Transformers, but to apply it in a targeted and ingenious way. For instance, thanks to the Localized Attention (i.e., "Area Attention") architecture, YOLO learns to focus its attention on specific areas of the image by segmenting it and identifying regions that require more in-depth analysis, without having to calculate relationships between all pixels. Other technical optimizations, such as "Flash Attention" or network simplification, help make the use of attention compatible with YOLO's characteristic speed.

In summary, YOLO strikes a clever compromise by leveraging the speed of CNNs for global analysis and integrating elements of contextual intelligence inspired by Transformers. This allows it to improve detection accuracy in complex scenes without sacrificing its speed, much like a fast athlete who also develops observational and strategic skills.

YOLO's versatility and speed have opened doors in numerous sectors, transforming complex challenges into tangible solutions.

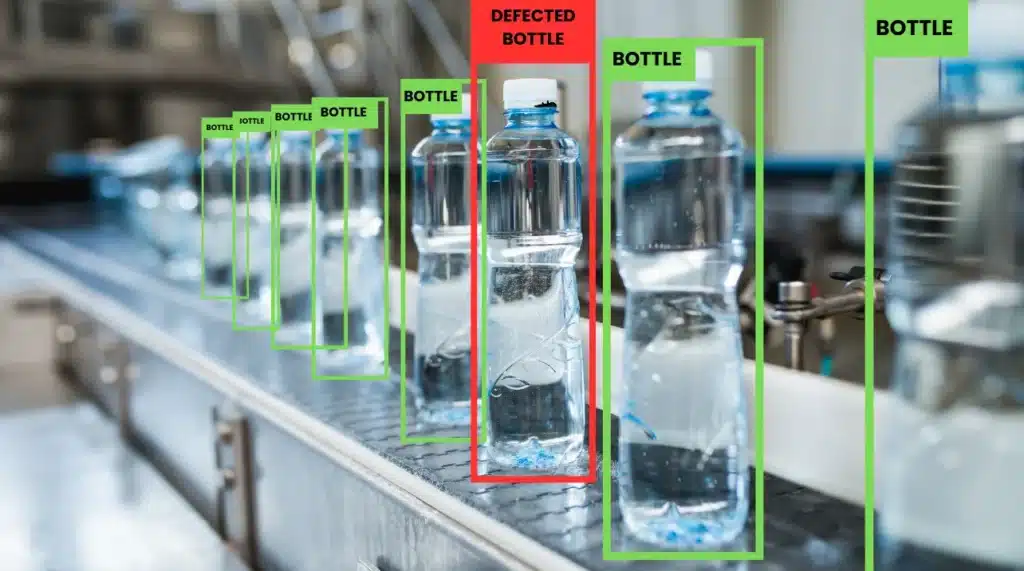

In the manufacturing sector, YOLO automates quality control by spotting defects on production lines, improving efficiency, and reducing costs.

Autonomous driving demands flawless environmental perception. YOLO detects pedestrians, vehicles, signage, and obstacles, ensuring safer and more reliable navigation.

YOLO revolutionizes surveillance by analyzing video streams to identify suspicious behavior, intrusions, or abandoned objects, enhancing the security of property and people.

In agriculture, YOLO helps identify plant diseases, detect weeds, or monitor livestock, optimizing yields and promoting sustainable farming.

Speed and accuracy are vital in medicine. YOLO assists practitioners by detecting anomalies in medical images (X-rays, MRIs), such as tumors or fractures, for quicker diagnoses.

During disasters, a rapid damage assessment is crucial. YOLO, by analyzing aerial or satellite imagery (e.g., for rural housing censuses in disadvantaged countries), helps coordinate relief efforts and efficiently deliver aid. This is one of the projects led by Aqsone Lab, participating in the Disaster Vulnerability Challenge proposed by the Zindi platform.

The Ultralytics framework is designed to simplify and accelerate the development of computer vision solutions. By providing a comprehensive set of pre-built tools, pre-trained models, and modular features, it allows researchers and developers to focus on the specific aspects of their projects rather than building foundations from scratch. Let's explore some of the key tools and benefits offered by this powerful framework in detail.

To ensure a model's robustness, training on a large and varied volume of data is essential. To increase this diversity without acquiring new images, data augmentation techniques are used. These include classic transformations (cropping, flipping, rotation, brightness and contrast modification) as well as more sophisticated methods like Mosaic (combining four images into one) or MixUp (weighted mixing of images and their labels). Applying these techniques exposes the model to a wider range of situations, thereby improving its ability to generalize.

During the inference (prediction) phase, Test-Time Augmentation (TTA) can improve performance. It involves applying multiple transformations to the test image, obtaining predictions for each transformed version, and then aggregating these predictions (e.g., by majority vote or averaging). Although more time-consuming, this method can significantly increase the model's precision and recall.

The Python library provided by Ultralytics is not limited to object detection. Their framework extends YOLO's capabilities to:

YOLO has established itself as an indispensable object detection algorithm, combining speed, accuracy, and remarkable adaptability. Its innovative architecture and constant evolution, driven by a dynamic scientific community and industrial players, allow it to excel in an impressive variety of fields. Continuous advancements, such as those explored with models like YOLOv12-turbo (see performance graph on the COCO dataset, a reference dataset for object detection), promise new capabilities.

The flexibility offered by frameworks like Ultralytics, with its advanced features for segmentation, pose estimation, or oriented detection, makes YOLO much more than a simple detector: it's a truly versatile toolkit for computer vision. As artificial intelligence continues to advance, YOLO and its derivatives are undoubtedly destined to redefine standards further, paving the way for ever more intelligent and powerful applications.